AI is changing the face of the workforce, and not only in large corporations. Easily accessible, inexpensive AI models make it possible to implement AI tools even in small companies. The benefits are easy to predict: faster access to internal information, process automation, and more effective key decision-making. Find out how to build your company’s own ChatGPT.

In this guide:

- GPT in your company. How does it work?

- Fine-tuning

- Fine-tuning does not add knowledge

- Company’s ChatGPT. Cloud vs. self-hosted

- AI in the cloud

- Locally hosted models

- Fingoweb AI Knowledge Database

- Access levels and security

- Data and its quality

- AI in your company

Large language models (LLMs), classification models, regression models, and many others have long been commercially available. However, many organizations, especially smaller ones, have been reluctant to use ML/AI in their daily work for various reasons, including costs, data security concerns, and a lack of access to specialists.

This changed in 2022 with the rapid popularization of AI-based tools, driven largely by OpenAI. Today, building your own ChatGPT is within reach even for small organizations. Such a tool can:

- Answer questions asked by employees about the organization and work within the company.

- Assist employees in accessing key data without having to dig through multiple layers of files.

- Support management in decision-making.

- Reduce the time between asking a question and obtaining information.

GPT (Generative Pre-trained Transformer) is a type of language model introduced by OpenAI in 2018. It is just one of hundreds of models that you can use in your organization.

GPT in your company. How does it work?

We need to distinguish ChatGPT from the GPT model itself. ChatGPT is the chat interface that OpenAI built on top of its GPT-3.5 and GPT-4 models to allow convenient interaction. Even the least technical person can open a chat window and ask a question.

The models offered by OpenAI can be used in other ways – for example, by fine-tuning them and integrating them through an API. OpenAI provides a platform for building custom solutions, and we write more about it in our article on GPTs.

Starting from a pretrained model, in this case a large language model (LLM), you can:

- Fine-tune the model. Fine-tuning means training the model further on new data, which updates its parameters and changes how it behaves. An example is given below.

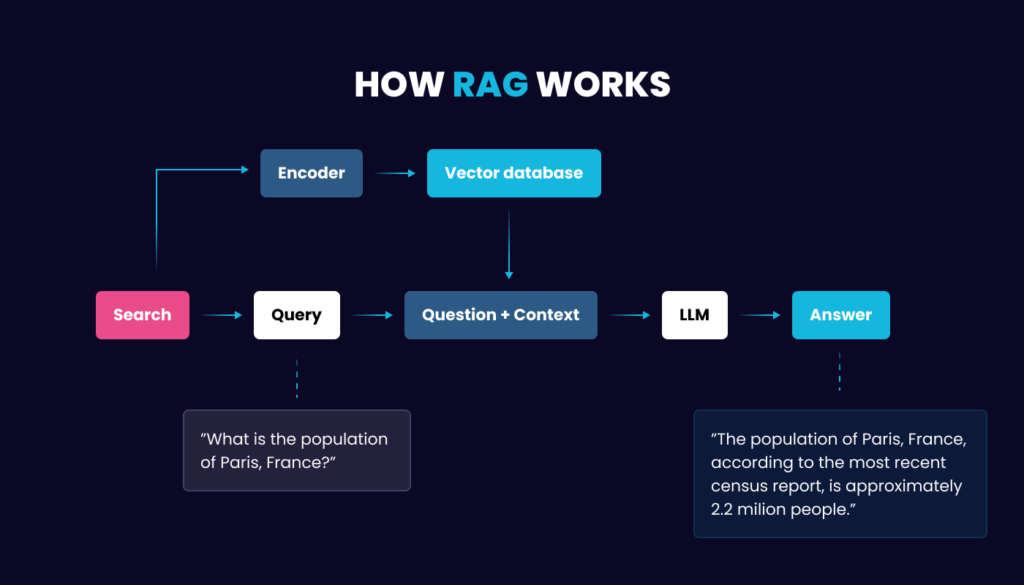

- Use the RAG method (Retrieval-Augmented Generation). The model draws on a dedicated set of data when answering, but that data does not change the model's parameters.

Fine-tuning

Creating GPT-4 required a huge amount of data. Although OpenAI does not disclose the exact figure, we know that the older GPT-3 model was trained on hundreds of billions of words. GPT-4 was probably trained on an even larger amount of data from books, websites, and other texts to improve its natural language understanding and content generation abilities.

The three main features of GPT are:

- Text generation.

- Understanding user context and intentions.

- Performing language-related tasks.

Fine-tuning adds new capabilities or improves performance in one of the above areas.

Below is a highly simplified example, in which we assume that we want to build a model for sentiment analysis of product reviews in our online store.

- Model selection. We choose the BERT model, which has been pretrained on a large body of text by learning to predict masked words and to predict whether one sentence follows another (next-sentence prediction).

- Training data. We collect a dataset consisting of product reviews, with each review labeled with a sentiment rating (e.g., positive, negative, neutral). This dataset is smaller than the original BERT training corpus, but specifically tailored to the sentiment analysis task.

- Fine-tuning. A classification layer that outputs sentiment labels is added on top of BERT, and the model is then trained on the new labeled data.

- Effect. After fine-tuning, the model can be used to analyze the sentiment of new product reviews.
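The steps above can be sketched in code. A real implementation would load BERT with a deep learning library such as PyTorch; to keep this example dependency-free and runnable, a hypothetical keyword-count encoder stands in for the frozen pretrained model, and only a newly added softmax classification head is trained on a handful of made-up labeled reviews. Training just the head while the encoder stays frozen is sometimes called linear probing; full fine-tuning would also update the encoder's own weights.

```python
import math
import re

LABELS = ["negative", "neutral", "positive"]

# Hypothetical stand-in for the frozen pretrained encoder (BERT in the article):
# it turns a review into a fixed feature vector and is never updated.
VOCAB = ["great", "love", "excellent", "bad", "broken", "terrible", "okay", "average", "fine"]


def encode(text):
    words = re.findall(r"[a-z]+", text.lower())
    return [float(words.count(w)) for w in VOCAB] + [1.0]  # last entry acts as a bias


def softmax(logits):
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(head, text):
    x = encode(text)
    return softmax([sum(w * xi for w, xi in zip(row, x)) for row in head])


def fine_tune(dataset, epochs=200, lr=0.5):
    """Train only the new classification head: one weight row per label."""
    dim = len(VOCAB) + 1
    head = [[0.0] * dim for _ in LABELS]
    for _ in range(epochs):
        for text, label in dataset:
            x = encode(text)
            probs = predict_proba(head, text)
            target = LABELS.index(label)
            for k in range(len(LABELS)):
                # Gradient of the cross-entropy loss with respect to the logit of class k
                grad = probs[k] - (1.0 if k == target else 0.0)
                for j in range(dim):
                    head[k][j] -= lr * grad * x[j]
    return head


def classify(head, text):
    probs = predict_proba(head, text)
    return LABELS[probs.index(max(probs))]


# Made-up labeled reviews standing in for the store's training data
reviews = [
    ("Great product, love it", "positive"),
    ("Excellent quality", "positive"),
    ("Arrived broken, terrible", "negative"),
    ("Bad fit", "negative"),
    ("Okay, average quality", "neutral"),
    ("Fine for the price", "neutral"),
]
head = fine_tune(reviews)
print(classify(head, "Love it, excellent!"))
```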

Fine-tuning does not add knowledge; it adds skills. Fine-tuned language models have wide applications in business, as the example above shows. However, if you want to build a custom ChatGPT for your organization, fine-tuning is not necessary.

What you need instead is a dedicated knowledge base from which the model can draw information to answer your or your employees' questions.

RAG combines a pretrained language model with an information retrieval system: for each question, the system first retrieves the most relevant passages from documents provided by your organization, and the model then generates an answer based on them.
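To make the retrieve-then-generate flow concrete, here is a minimal, dependency-free sketch. It is an illustration under simplifying assumptions, not how the Fingoweb AI Knowledge Database is built: production RAG systems typically rank passages with an embedding model and a vector database, whereas here a bag-of-words cosine similarity stands in for that step. The documents and the function names `retrieve` and `build_prompt` are hypothetical, and the call to the LLM itself is omitted; the code only assembles the prompt that would be sent to the model.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Bag-of-words term counts; a stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=2):
    """Return the k documents most similar to the question."""
    q = vectorize(question)
    return sorted(documents, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_prompt(question, documents, k=2):
    """Augment the question with retrieved context before it goes to the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical internal documents
docs = [
    "Vacation requests are submitted in the HR system two weeks in advance.",
    "New employees receive their laptop and accounts on the first day.",
    "The sales team meets every Monday morning.",
]
print(build_prompt("How do I submit vacation requests?", docs, k=1))
```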

Looking for a solution that combines the pretrained LLaMA model with the RAG method? One example is the Fingoweb AI Knowledge Database.

Company’s ChatGPT. Cloud vs. self-hosted

The biggest advantages of the RAG method are ease of implementation and high effectiveness. RAG combined with an open-source model offers:

- A wide choice of models trained on large amounts of data and tailored to specific needs (NLP, text-to-speech, GPT, computer vision).

- Low implementation costs, both financially and in terms of time.

- Extensive technical documentation.

- The ability to choose a model based on data volume and technical capabilities, such as hardware limitations.

Regardless of the model selected, building a solution requires providing internal, company-specific data (e.g., customer information, sales volume, CEO's birthday). When picking a solution, you need to consider whether it is better to integrate with the cloud or create an internally hosted tool.

AI in the cloud

A wide range of cloud AI platforms is offered as a service by Amazon, Google, IBM, OpenAI, and other organizations. Amazon has SageMaker, Google has Vertex AI, and IBM has Watson, to name just a few of the largest and best-known providers.

Each platform provides comprehensive tools for building ML/DL and AI solutions, covering data labeling, model building, training and validation, hosting, and integration.

AI in the cloud – major advantages:

- Proven tools from industry giants.

- Considerable selection of solutions, including models and integrations.

- In theory (if you have the budget), unlimited computing power.

AI in the cloud – major disadvantages:

- Vendor lock-in to the cloud provider's services.

- The need to send data to the cloud.

- High costs, usually billed by usage (per hour of device operation, per generated image, per interaction with the model, etc.).

ChatGPT for business - locally hosted models

The alternative to the large corporations' platforms is open-source models that can be hosted locally. Your internal ChatGPT can then be accessible only within the intranet, with all data managed by your organization.

There are various libraries and frameworks that can be used to run AI models. These include TensorFlow, PyTorch, and the PaddlePaddle platform.

Locally hosted AI – major advantages:

- Data security and full control.

- Numerous open-source solutions available on the market.

- No usage-based fees paid to a provider.

Locally hosted AI – major disadvantages:

- The need to purchase and maintain hardware.

- Knowledge required for model selection, training, and deployment.

Depending on the model, even an average graphics card may be sufficient. LLaMA2-70B, however, requires dedicated hardware: in my opinion, a minimum of 2 × 24 GB or 1 × 48 GB of GPU memory. It all depends on your needs and the chosen model.
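A rough way to see where such figures come from is to multiply the parameter count by the bytes stored per parameter. The sketch below is a back-of-the-envelope estimate, assuming weights-only memory plus a hypothetical 20% overhead factor for the KV cache and activations; real requirements vary with context length and the inference framework. By this estimate, a 70B model needs about 140 GB just for its weights at 16-bit precision and about 35 GB at 4-bit, which suggests the 48 GB figure above assumes a quantized model.

```python
def weight_memory_gb(params_billion, bits_per_param):
    """Memory needed just to hold the weights, in GB (1 GB = 10**9 bytes)."""
    return params_billion * bits_per_param / 8


def fits_on_gpus(params_billion, bits_per_param, total_vram_gb, overhead=1.2):
    # `overhead` is a hypothetical safety factor for the KV cache and activations
    return weight_memory_gb(params_billion, bits_per_param) * overhead <= total_vram_gb


for bits in (16, 8, 4):
    print(f"70B at {bits}-bit: {weight_memory_gb(70, bits):.0f} GB, "
          f"fits in 48 GB: {fits_on_gpus(70, bits, 48)}")
```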

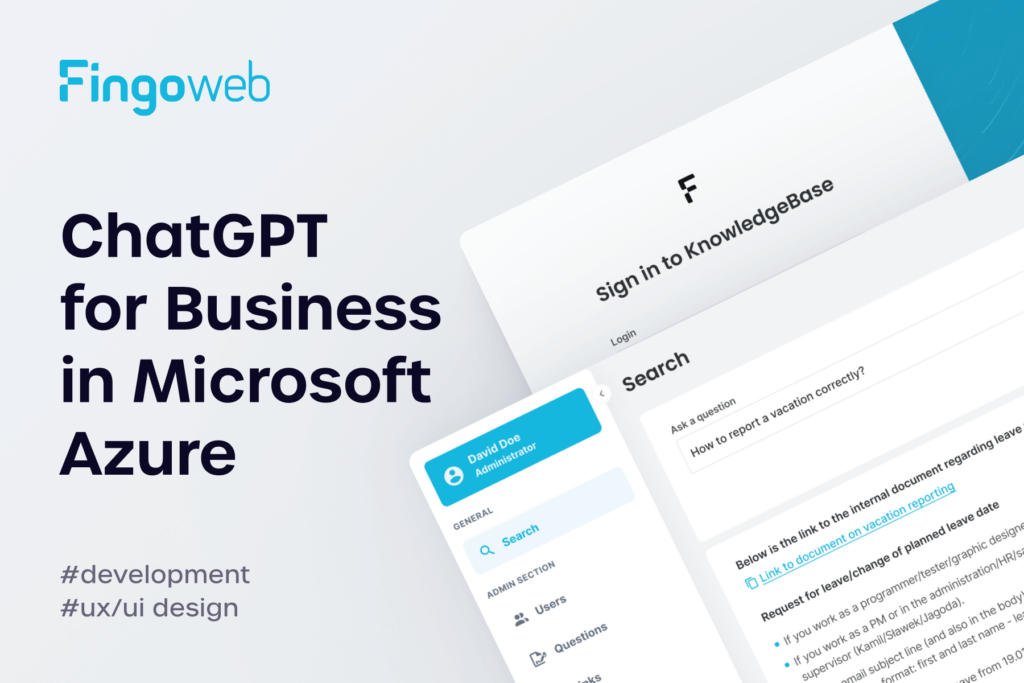

Fingoweb AI Knowledge Database. Our ChatGPT

As a software house in Kraków employing over 50 people, we have a range of documents related to procedures, onboarding, and working with our clients. Using the LLaMA2 model, we have developed the Fingoweb AI Knowledge Database, a tool that allows for obtaining internal information using natural language.

The Fingoweb AI Knowledge Database aggregates organizational data and enables our employees to quickly and directly access the necessary information.

In the case of our internal database, we focused on:

- Information about vacations, company operations, and organizational structure.

- Employee onboarding.

For your organization, the scope of data may be entirely different. A LLaMA2-based tool can work with PDF documents, spreadsheets, and practically any other form of text, once their content is extracted as text for the model.
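Language models read plain text, so other formats are typically converted before indexing. As one hypothetical example, the sketch below uses Python's standard `csv` module to turn each spreadsheet row (exported as CSV) into a "column: value" passage that a retriever can index like any other text; the column names and values are made up. PDFs would need a text-extraction step first, for example with a library such as pypdf.

```python
import csv
import io


def spreadsheet_to_passages(csv_text):
    """Turn each CSV row into one 'column: value; ...' passage for indexing."""
    reader = csv.DictReader(io.StringIO(csv_text))  # first row = column names
    return ["; ".join(f"{col}: {val}" for col, val in row.items()) for row in reader]


# Hypothetical export of a client-notes spreadsheet
sheet = "client,owner,next_meeting\nClient A,Anna,2024-05-10\nClient B,Piotr,2024-05-14\n"
for passage in spreadsheet_to_passages(sheet):
    print(passage)
```

Keeping the column name next to each value preserves context that would otherwise be lost once the row is separated from its header.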

The biggest advantage: in our case, launching the AI Knowledge Database reduced the time it takes to obtain information. Instead of writing to multiple people on Slack to find an answer, a simple interface allows us to receive it within seconds.

Access levels and security

Each employee can access only the data permitted by the access level assigned by the administrator. The knowledge base can be continuously updated by adding more files to the dataset, without retraining the model.
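One simple way to picture both mechanisms is a knowledge base in which every document carries an access level and new files can be appended at any time. This is a hypothetical sketch: the numeric levels, the `KnowledgeBase` class, and the word-overlap search are illustrative assumptions, not the actual design of our tool. The key choice is filtering by access level before retrieval, so restricted passages never reach the model's prompt.

```python
import re
from dataclasses import dataclass, field


def words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class Document:
    text: str
    access_level: int  # hypothetical scale: 0 = everyone, higher = more restricted


@dataclass
class KnowledgeBase:
    documents: list[Document] = field(default_factory=list)

    def add(self, text: str, access_level: int = 0) -> None:
        # Adding a file only extends the searchable data; the model is untouched.
        self.documents.append(Document(text, access_level))

    def search(self, question: str, user_level: int) -> list[str]:
        # Filter by permissions first, so restricted text never reaches the prompt.
        allowed = [d for d in self.documents if d.access_level <= user_level]
        query = words(question)
        scored = [(len(query & words(d.text)), d.text) for d in allowed]
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored]


kb = KnowledgeBase()
kb.add("Vacation requests go through the HR system.")
kb.add("Salary bands for each position.", access_level=2)
print(kb.search("salary bands", user_level=0))
print(kb.search("salary bands", user_level=2))
```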

It is up to you to decide what data to include in the tool, and that choice should be driven by business needs. For example, a company with a large sales department can build a solution that helps salespeople analyze notes from meetings with clients. The prerequisite, of course, is having such notes in the first place.

Data and its quality

In the case of fine-tuning, the quality and quantity of data are crucial for the final result. The RAG method allows the knowledge base to be swapped dynamically, so, in theory and in practice, you can add just a single PDF and the tool will still work.

AI in your company

Building and implementing a tool for internal use within an organization can significantly increase competitiveness and shorten the time it takes to make key decisions. There are countless possibilities available on the market, and the only limitations will be budget and time.

AI tools are not a solution to all organizational problems. However, they can streamline processes and drive the organization towards digital transformation.

ChatGPT for business – frequently asked questions

Is building a ChatGPT tool expensive?

It all depends on the needs of your organization. Building a model from scratch will be more costly than fine-tuning an existing one, and the RAG method combined with an open-source model will be the most cost-effective in terms of finances and time.

What are the biggest advantages of a self-hosted ChatGPT?

First and foremost, data security and no usage-based fees.

What types of data can we use with the RAG method for ChatGPT?

Any data format can be used, provided its content can be extracted as text for the model. The Fingoweb AI Knowledge Database, which we built on LLaMA2, supports PDFs, text files, and spreadsheets.